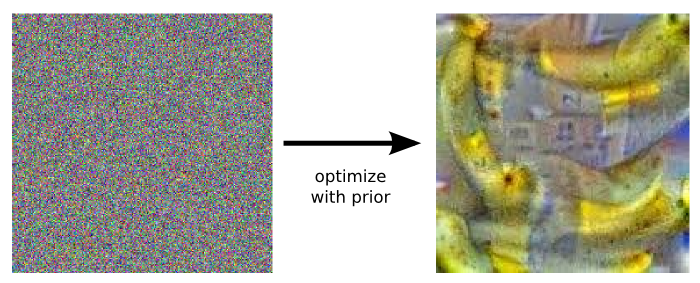

There’s a fun post over on Google’s Research Blog detailing the “dreams” of neural networks, generated by feeding random noise images into the networks and ‘nudging’ the image iteratively toward a certain classification. While the details are somewhat different, this is a great visual analogue for the processes used in Susurrant to expose the workings of its own audio “interpretation” algorithms.

I saw some folks responding to that article with a desire to apply the technique to audio, which is an integral part of what this tool aims to accomplish.

I hope soon to supplement the Susurrant demo with a narrative explanation of what each topic “sounds like,” both to me and the machine.